Within days of its launch, the DeepSeek AI assistant, a mobile app that provides a chatbot interface for DeepSeek R1, hit the top of Apple's App Store chart, outranking OpenAI's ChatGPT mobile app. The DeepSeek V2 Chat and DeepSeek Coder V2 models have been merged and upgraded into the new model, DeepSeek V2.5. So you can have different incentives. And, per Land, can we really control the future when AI may be the natural evolution of the technological capital system on which the world depends for trade and for the creation and settling of debts? We design an FP8 mixed precision training framework and, for the first time, validate the feasibility and effectiveness of FP8 training on an extremely large-scale model. We then train a reward model (RM) on this dataset to predict which model output our labelers would prefer. If the export controls end up playing out the way the Biden administration hopes they do, then you could channel a whole country and multiple enormous billion-dollar startups and companies into going down these development paths. Therefore, it’s going to be hard to get open source to build a better model than GPT-4, simply because there are so many things that go into it.
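
Reward models of the kind mentioned above are commonly trained with a pairwise (Bradley-Terry style) objective. This pushes the score of the output labelers preferred above the score of the one they rejected. The following is a minimal, hypothetical sketch of that idea, not DeepSeek's or anyone else's actual implementation. It makes three assumptions: each output is already summarized as a small feature vector, the reward model is a plain linear scorer, and training uses simple per-pair gradient descent. The names `score`, `pairwise_loss`, and `train_reward_model` are illustrative.

```python
import math
from typing import List, Tuple

Vector = List[float]


def score(w: Vector, x: Vector) -> float:
    """Hypothetical linear reward model: r(x) = w . x."""
    return sum(wi * xi for wi, xi in zip(w, x))


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def pairwise_loss(margin: float) -> float:
    """-log(sigmoid(margin)), where margin = r(chosen) - r(rejected)."""
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def train_reward_model(pairs: List[Tuple[Vector, Vector]], dim: int,
                       lr: float = 0.1, epochs: int = 200) -> Vector:
    """Each pair is (features of the preferred output, features of the rejected one)."""
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = score(w, chosen) - score(w, rejected)
            # dL/dmargin = -(1 - sigmoid(margin)); dmargin/dw = chosen - rejected,
            # so a gradient-descent step adds lr * (1 - sigmoid(margin)) * (chosen - rejected).
            g = 1.0 - sigmoid(margin)
            for i in range(dim):
                w[i] += lr * g * (chosen[i] - rejected[i])
    return w


# Toy preference data (made up for illustration): labelers favor a higher first feature.
pairs = [([1.0, 0.2], [0.0, 0.3]),
         ([0.8, 0.5], [0.1, 0.4]),
         ([0.9, 0.1], [0.2, 0.2])]
w = train_reward_model(pairs, dim=2)
mean_loss = sum(pairwise_loss(score(w, c) - score(w, r)) for c, r in pairs) / len(pairs)
print(f"weights={w}, mean pairwise loss={mean_loss:.4f}")
```

The loss depends only on the score difference, so the reward model learns a relative ranking rather than absolute quality labels.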

But if you want to build a model better than GPT-4, you need a lot of money, a lot of compute, a lot of data, and a lot of smart people. A lot of the time, it’s cheaper to solve these problems because you don’t need a lot of GPUs. You need a variety of everything. Today, I struggle a lot with agency. So a lot of open-source work is things you can get out quickly that generate interest and get more people looped into contributing, whereas a lot of the labs do work that is maybe less applicable in the short term but hopefully turns into a breakthrough later on. But it’s very hard to compare Gemini versus GPT-4 versus Claude simply because we don’t know the architecture of any of those things. You can only figure these things out by spending a long time experimenting and trying things out. The sad thing is that as time passes we know less and less about what the big labs are doing, because they don’t tell us at all.

What is driving that gap, and how would you expect it to play out over time? For example, the DeepSeek-V3 model was trained using approximately 2,000 Nvidia H800 chips over 55 days, costing around $5.58 million, substantially less than comparable models from other companies. The H800 cards within a cluster are connected by NVLink, and the clusters are connected by InfiniBand. And then there are some fine-tuned data sets, whether they’re synthetic data sets or data sets that you’ve collected from some proprietary source somewhere. Data is definitely at the core of it now that LLaMA and Mistral are out there; it’s like a GPU donation to the public. Just through that natural attrition, people leave all the time, whether by choice or not, and then they talk. We could talk about what some of the Chinese companies are doing as well, which is pretty interesting from my perspective. Overall, ChatGPT gave the best answers, but we’re still impressed by the level of "thoughtfulness" that Chinese chatbots display.
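
The quoted training figures can be put in perspective with a back-of-envelope calculation. It rests on two simplifications that are assumptions, not claims from the source: every one of the roughly 2,000 H800s ran continuously for all 55 days, and the $5.58 million covers only that GPU time. The function name is hypothetical.

```python
from typing import Tuple


def implied_gpu_hour_rate(num_gpus: int, days: float, total_cost_usd: float) -> Tuple[float, float]:
    """Return (GPU-hours, implied cost per GPU-hour).

    Assumes every GPU runs 24 hours a day for the whole period, which is an
    idealization (real clusters have downtime, restarts, and stragglers).
    """
    gpu_hours = num_gpus * days * 24
    return gpu_hours, total_cost_usd / gpu_hours


gpu_hours, rate = implied_gpu_hour_rate(num_gpus=2_000, days=55, total_cost_usd=5_580_000)
print(f"{gpu_hours:,.0f} GPU-hours, about ${rate:.2f} per GPU-hour")
# prints "2,640,000 GPU-hours, about $2.11 per GPU-hour"
```

Under these assumptions, the headline cost corresponds to a rental-style rate of roughly two dollars per GPU-hour. The figure excludes research, ablations, data, and staff costs.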

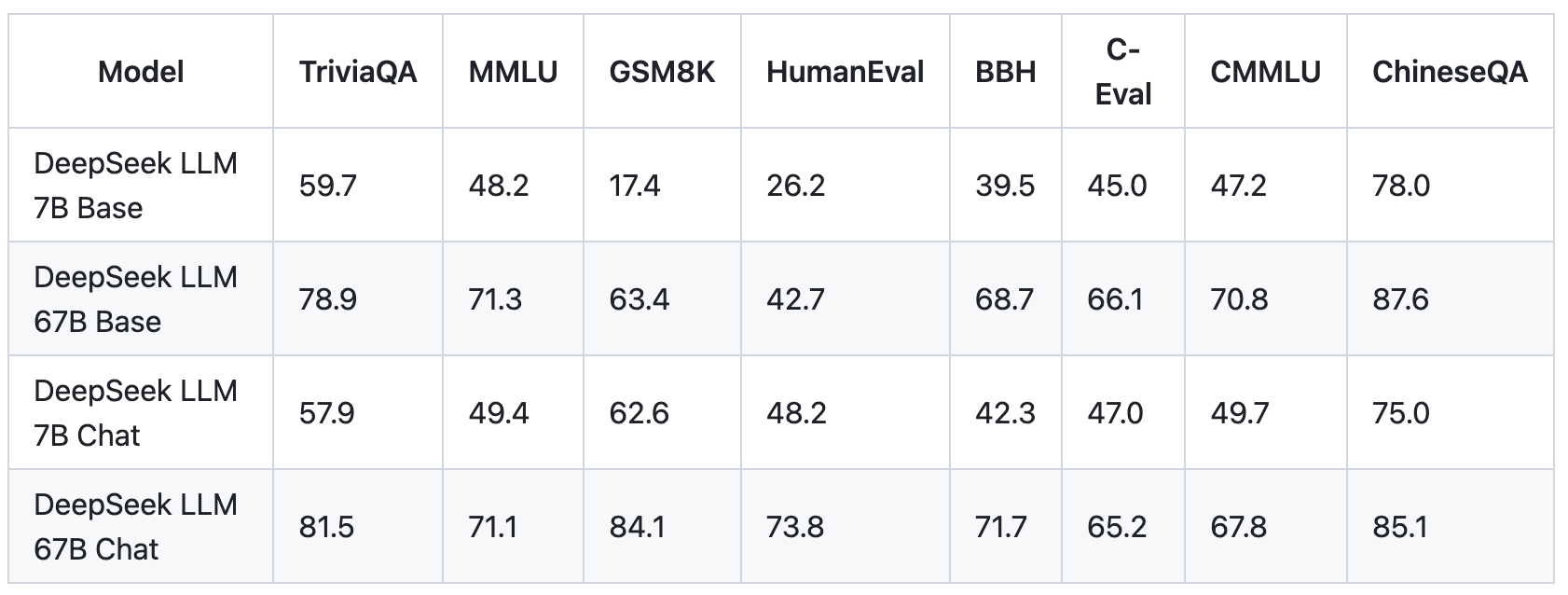

Even ChatGPT o1 was not able to reason well enough to solve it. That is even better than GPT-4. How does knowledge of what the frontier labs are doing, even though they’re not publishing, end up leaking out into the broader ether? That was surprising because they’re not as open on the language model stuff. 1.3b-instruct is a 1.3B-parameter model initialized from deepseek-coder-1.3b-base and fine-tuned on 2B tokens of instruction data. The open-source world has been really great at helping companies take some of these models that aren’t as capable as GPT-4 and, in a very narrow domain with very specific data unique to you, make them better.

• Managing fine-grained memory layout during chunked data transfers to multiple experts across the IB and NVLink domains.

From this perspective, each token will select 9 experts during routing, where the shared expert is regarded as a heavy-load one that will always be selected. Jordan Schneider: This idea of architecture innovation in a world in which people don’t publish their findings is a really interesting one.
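
The routing remark above (nine experts per token, one of which is a shared expert that is always selected) can be illustrated with a minimal sketch. It makes the following assumptions:

- 8 routed experts are chosen by top-k affinity, plus 1 shared expert.
- Affinities are sigmoid scores.
- Gating weights are renormalized over the selected routed experts.
- The shared expert is added without a gate.

The toy expert count, the `SHARED` sentinel index, and the function names are hypothetical and are not taken from DeepSeek's code.

```python
import math
from typing import Dict, List, Tuple

SHARED = -1  # hypothetical sentinel index for the always-selected shared expert


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def route_token(logits: List[float], top_k: int = 8) -> Tuple[List[int], Dict[int, float]]:
    """Return (selected experts, gating weights) for a single token.

    `logits` holds one raw score per routed expert. The shared expert is always
    included and is not gated; the routed experts are gated by their sigmoid
    affinity, renormalized over the top_k experts that were selected.
    """
    affinities = [sigmoid(z) for z in logits]
    ranked = sorted(range(len(affinities)), key=lambda i: affinities[i], reverse=True)
    chosen = ranked[:top_k]
    total = sum(affinities[i] for i in chosen)
    weights = {i: affinities[i] / total for i in chosen}
    return [SHARED] + chosen, weights


# Toy example with 16 routed experts (production MoE models use many more).
logits = [math.sin(3 * i) for i in range(16)]
experts, weights = route_token(logits)
print(len(experts), experts[0])  # prints "9 -1": 9 experts per token, shared expert first
```

Because the shared expert bypasses the top-k competition, it sees every token. This is why the text describes it as a heavy-load expert.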
